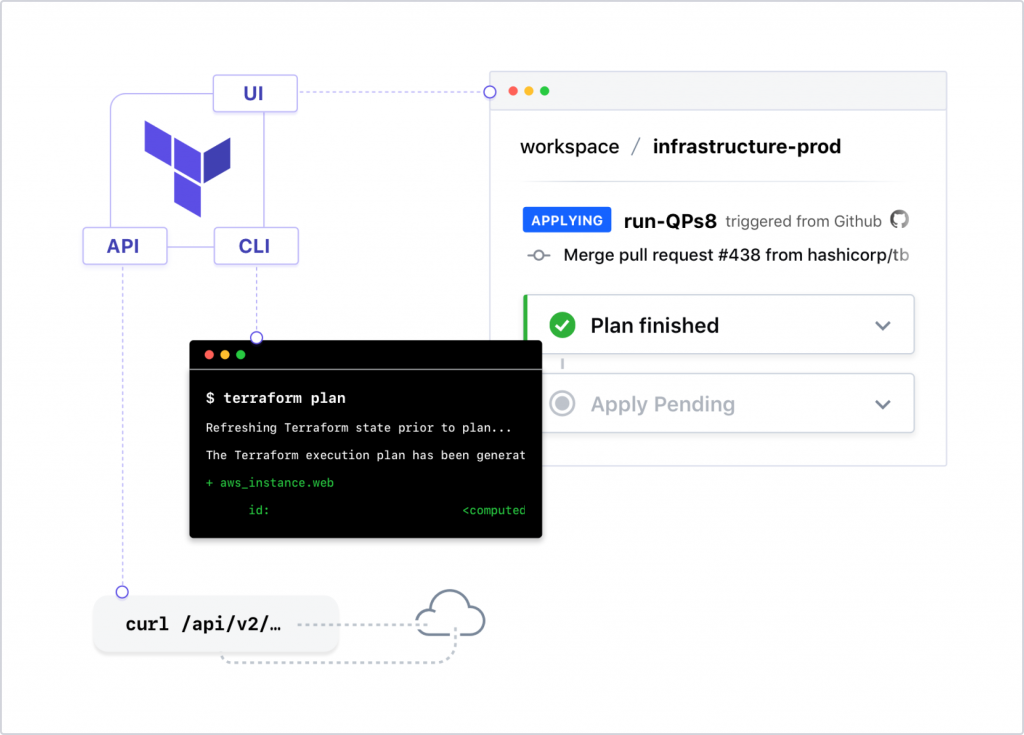

On its website Terraform is defined as:

> Terraform provides a common configuration to launch infrastructure — from physical and virtual servers to email and DNS providers. Once launched, Terraform safely and efficiently changes infrastructure as the configuration is evolved.
>
> Simple file based configuration gives you a single view of your entire infrastructure.

For us at Gluo, this makes Terraform very appealing: it allows us to work with multiple cloud providers while maintaining a single code base.

We expect to work with multiple cloud providers in the future. More and more businesses are moving to the cloud, and rather than sticking to one provider for all their services, they select whichever service best complements their business needs and stay independent of any single cloud provider.

Therefore, we decided to have a look at how Terraform can help us in these cases.

In our first trial we decided to set up a mixed environment with multiple accounts on AWS and Azure.

AZURE

On the Azure side we have a single virtual machine, which will function as the database server for the application running in AWS.

This server sits behind a security group that only allows MySQL traffic from the AWS instance and SSH traffic from our company network.

As you can see below, setting up the virtual machine is simple and self-explanatory: you just declare parameters such as the instance size, OS image, location and credentials.

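```hcl
resource "azure_instance" "MysqlServer" {
  name     = "${var.azure_instance_name}"
  image    = "Ubuntu Server 14.04 LTS"
  size     = "Basic_A1"
  location = "North Europe"

  # Login credentials, supplied through variables
  username = "${var.azure_instance_username}"
  password = "${var.azure_instance_password}"

  # Referencing the other resources (instead of hard-coding their names)
  # tells Terraform to create them before the instance
  storage_service_name = "${azure_storage_service.gluo-blog.name}"
  security_group       = "${azure_security_group.dbservers.name}"
  virtual_network      = "${azure_virtual_network.default.name}"
  subnet               = "blognet"

  # Public endpoint for SSH access
  endpoint {
    name         = "SSH"
    protocol     = "tcp"
    public_port  = 22
    private_port = 22
  }
}
```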

We also had to create a virtual network with a subnet, as well as a security group. The security group is defined with the `azure_security_group` resource.
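A minimal sketch of that security group, assuming the `azure` provider's `azure_security_group` resource with its `name` and `location` arguments. The `dbservers` name is the one the instance and the rules refer to; placing it in "North Europe" is our assumption, chosen to match the rest of the setup:

```hcl
# Network security group for the database server.
# "dbservers" is the name the instance and the rules refer to.
resource "azure_security_group" "dbservers" {
  name     = "dbservers"
  location = "North Europe" # assumed: same region as the VM and the network
}
```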

Next we created two security group rules: one to allow SSH access from our company network and one to allow MySQL access from the public IP of the AWS NAT instance.

```hcl
resource "azure_security_group_rule" "mysql-access" {
  name                 = "mysql-access-rule"
  security_group_names = ["${azure_security_group.dbservers.name}"]
  type                 = "Inbound"
  action               = "Allow"
  priority             = 300

  # Only the Elastic IP of the AWS NAT instance may connect...
  source_address_prefix = "${aws_eip.blogpost_nat_eip.public_ip}"
  source_port_range     = "*"

  # ...to the MySQL port on any host in our Azure address space
  destination_address_prefix = "10.1.2.0/24"
  destination_port_range     = "3306"
  protocol                   = "TCP"
}
```

Again the code largely explains itself. The rule is attached to our security group through `azure_security_group.dbservers.name`, and its source is the public (Elastic) IP of the AWS NAT instance. It allows MySQL traffic (port 3306) to all resources in the 10.1.2.0/24 address space of our Azure virtual network.
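The SSH rule for our company network is not shown above. It could look like the following sketch, where `company_cidr` is a hypothetical variable holding the office's public address range and the priority is an arbitrary value that only has to differ from the MySQL rule's:

```hcl
# Hypothetical variable: public CIDR of the company network (e.g. set in a .tfvars file)
variable "company_cidr" {}

resource "azure_security_group_rule" "ssh-access" {
  name                 = "ssh-access-rule"
  security_group_names = ["${azure_security_group.dbservers.name}"]
  type                 = "Inbound"
  action               = "Allow"
  priority             = 200 # arbitrary; must not clash with the MySQL rule (300)

  # SSH from the company network only
  source_address_prefix      = "${var.company_cidr}"
  source_port_range          = "*"
  destination_address_prefix = "10.1.2.0/24"
  destination_port_range     = "22"
  protocol                   = "TCP"
}
```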

We also had to define a virtual network. Again, the code is self-explanatory: you choose the location, the address space and the subnets.

```hcl
resource "azure_virtual_network" "default" {
  name          = "blog-network"
  address_space = ["10.1.2.0/24"]
  location      = "North Europe"

  # The database server lives in the first half of the address space
  subnet {
    name           = "blognet"
    address_prefix = "10.1.2.0/25"
  }
}
```

The last thing we have to create is a storage service to hold the VM's disks. This is done with the `azure_storage_service` resource. Below is our configuration:

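```hcl
resource "azure_storage_service" "gluo-blog" {
  name         = "gluoblog"
  location     = "North Europe"
  description  = "Made by Terraform."
  account_type = "Standard_LRS" # locally redundant storage
}
```

Please note that for this configuration we used Terraform's `azure` provider, which talks to the classic Azure Service Management API (as opposed to the newer `azurerm` provider for Azure Resource Manager).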

AWS

We will use two separate AWS accounts to demonstrate Terraform’s ability to work with multiple accounts as well as multiple providers. To use two accounts of the same provider, we set up our variables file to accept two different sets of keys.

```hcl
# Credentials for the main AWS account
variable "main_access_key" {}
variable "main_secret_key" {}

# Credentials for the linked AWS account
variable "linked_access_key" {}
variable "linked_secret_key" {}

# Region used by both accounts
variable "region" {}
```

These variables are filled in from a secret `.tfvars` file containing both sets of access keys. The file should be named `<something>.tfvars` and contain at least the following lines:

```hcl
main_access_key   = "<main_account_access_key>"
main_secret_key   = "<main_account_secret_key>"
linked_access_key = "<linked_account_access_key>"
linked_secret_key = "<linked_account_secret_key>"
region            = "eu-central-1"
```

Next we will set up the first AWS account with a few resources.

Create a `main.tf` file to describe the resources for the main account.

```hcl
# main.tf

provider "aws" {
  region     = "${var.region}"
  access_key = "${var.main_access_key}"
  secret_key = "${var.main_secret_key}"
}

#########################
# lots of resources here
#########################

########
## EIP
########

resource "aws_eip" "blogpost_nat_eip" {
  instance = "${aws_instance.blogpost_nat.id}"
  vpc      = true
}
```

The full setup of resources, including the VPC, subnets and instances, can be found here; the EIP resource is the one we will reference later on.
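To check which address ends up in the security group rules, it can help to expose the Elastic IP as an output. A small sketch; the output name `nat_public_ip` is our own choice:

```hcl
# Print the NAT instance's Elastic IP at the end of "terraform apply"
output "nat_public_ip" {
  value = "${aws_eip.blogpost_nat_eip.public_ip}"
}
```

After an apply, `terraform output nat_public_ip` prints the value again.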

The `linked.tf` file is used to reference public IP addresses from both our main AWS account and the Azure account:

```hcl
# linked.tf

# Specify the provider and access details for the linked account
provider "aws" {
  region     = "${var.region}"
  access_key = "${var.linked_access_key}"
  secret_key = "${var.linked_secret_key}"
  alias      = "link"
}
```

We added an alias to differentiate between the accounts. Only the additional configuration needs one: the `aws` provider block without an alias (here, the main account) remains the default for every resource that does not specify a provider. We can now create resources as usual, adding `provider = "aws.link"` so Terraform knows in which account it should launch them.
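The security group in the linked account refers to a VPC, `aws_vpc.blogpost_linked_vpc`, whose definition is not shown in this post. A minimal sketch of it shows the alias in use; the CIDR block is a hypothetical value:

```hcl
resource "aws_vpc" "blogpost_linked_vpc" {
  # Create the VPC in the linked account instead of the default (main) one
  provider   = "aws.link"
  cidr_block = "10.20.0.0/16" # hypothetical address range
}
```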

In this linked account we create a security group that allows the EIP of the NAT instance in the main account, as well as the public (VIP) address of the Azure MySQL server, to access ports 22 and 80 on the instance in the linked account.

```hcl
########
## SG
########

resource "aws_security_group" "blogpost_link_sg" {
  provider = "aws.link"
  name     = "blogpost_link_sg"
  vpc_id   = "${aws_vpc.blogpost_linked_vpc.id}"

  # HTTP access from main account
  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["${aws_eip.blogpost_nat_eip.public_ip}/32"]
  }

  # SSH access from main account
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["${aws_eip.blogpost_nat_eip.public_ip}/32"]
  }

  # HTTP access from the Azure MySQL server
  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["${azure_instance.MysqlServer.vip_address}/32"]
  }

  # SSH access from the Azure MySQL server
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["${azure_instance.MysqlServer.vip_address}/32"]
  }

  # outbound internet access
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```

Because every resource address (resource type plus name) is unique within a configuration, we can reference resources created in other accounts, and even in another cloud, directly by name.
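The instance in the linked account that this security group protects is not shown in the post. A sketch of how it could be attached, assuming a hypothetical AMI variable `linked_ami_id` and a hypothetical subnet `aws_subnet.blogpost_linked_subnet` inside the linked VPC (neither is part of the original setup):

```hcl
# Hypothetical: an AMI ID valid in the linked account's region, set via the .tfvars file
variable "linked_ami_id" {}

resource "aws_instance" "blogpost_linked_web" {
  # Launch in the linked account
  provider      = "aws.link"
  ami           = "${var.linked_ami_id}"
  instance_type = "t2.micro"

  # Hypothetical subnet inside aws_vpc.blogpost_linked_vpc
  subnet_id = "${aws_subnet.blogpost_linked_subnet.id}"

  # Only the NAT EIP and the Azure VM's VIP may reach ports 22 and 80
  vpc_security_group_ids = ["${aws_security_group.blogpost_link_sg.id}"]
}
```

Because the instance and the security group both use the `aws.link` provider, they end up in the same account; the subnet must also belong to the security group's VPC for AWS to accept the association.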

Now all that is left is to run `terraform apply`, passing the secret variables file, and let Terraform provision everything:

```shell
terraform apply -var-file=<path-to-secret-tfvars-file>
```